By MATTHEW HOLT

It was not so long ago that you could create one of those maps of health care IT or digital health and be roughly right. I did it myself back in the Health 2.0 days, including the old subcategories of the “Rebel Alliance of New Provider Technologies” and the “Frontier of Patient Empowerment Technologies”.

But those easy days of matching a SaaS product to the intended user, and differentiating it from others, are gone. The map has been upended by the hurricane that is generative AI, and it has thrown the industry into a state of confusion.

For the past several months I have been trying to figure out who is going to do what in AI health tech. I’ve had lots of formal and informal conversations, read a ton, and been to three conferences, all focused dead on this topic. And it’s clear no one has a good answer.

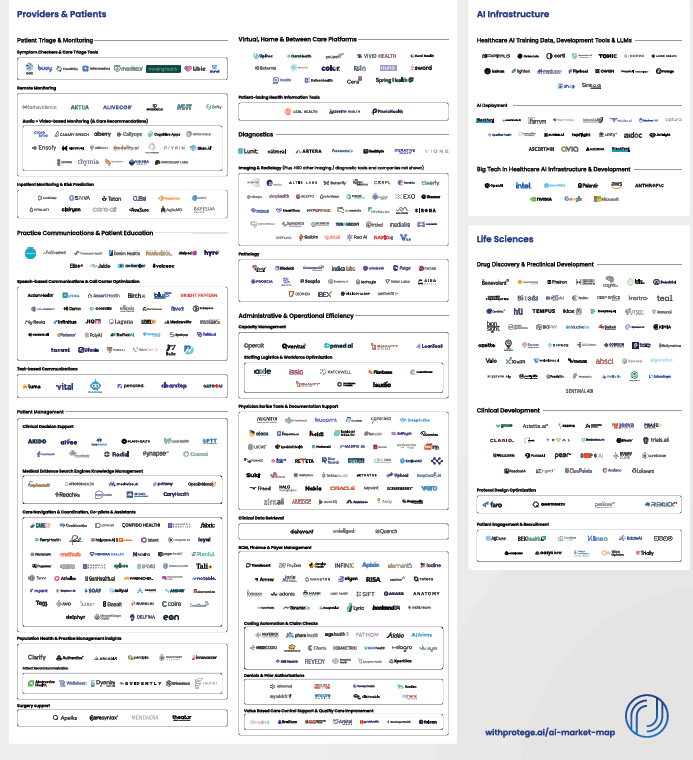

Of course this hasn’t stopped people trying to draw maps like this one from Protege. As you can tell, there are hundreds of companies building AI-first products for every aspect of the health care value (or lack of it!) chain.

But this time it’s different. It’s not at all clear that AI will stop at the border of a user or even have a clearly defined function. It’s not even clear that there will be an “AI for Health Tech” sector.

This is a multi-dimensional issue.

The main AI LLMs–ChatGPT (OpenAI/Microsoft), Gemini (Google/Alphabet), Claude (Anthropic/Amazon), Grok (X/Twitter), Llama (Meta/Facebook)–are all capable of incredible work inside of health care and of course outside it. They can now write in any language you like, code, and create movies, music and images, and they are all getting better and better.

And they are fantastic at interpretation and summarization. I literally dumped a dense, pretty incomprehensible 26-page CMS RFI document into ChatGPT the other day and in a few seconds it told me what they asked for and what they were actually looking for (that unwritten subtext). The CMS official who authored it was very impressed and was a little upset they weren’t allowed to use it. If I had wanted to help CMS, it would have written the response for me too.

The big LLMs are also developing “agentic” capabilities. In other words, they are able to conduct multistep business and human processes.

Right now they are being used directly by health care professionals and patients for summaries, communication and companionship. Increasingly they are being used for diagnostics, coaching and therapy. And of course many health care organizations are using them directly for process redesign.

Meanwhile, the core workhorses of health care are the EMRs used by providers, and the biggest kahuna of them all is Epic. Epic has a relationship with Microsoft, which has its own AI play and also has its own strong relationship with OpenAI – or at least as strong as investing $13bn in a non-profit will make your relationship. Epic is now using Microsoft’s AI in note summaries, patient communications, etc., and is also using DAX, the ambient AI scribe from Microsoft’s subsidiary Nuance. Epic also has a relationship with DAX rival Abridge.

But that’s not necessarily enough, and Epic is clearly building its own AI capabilities. In an excellent review over at Health IT Today, John Lee breaks down Epic’s non-trivial use of AI in its clinical workflow:

- The platform now offers tools to reorganize text for readability, generate succinct, patient-friendly summaries, hospital course summaries, discharge instructions, and even translating discrete clinical data into narrative instructions.

- We will be able to automatically destigmatize language in notes (e.g., changing “narcotic abuser” to “patient has opiate use disorder”).

- Even as a physician, I sometimes have a hard time deciphering the shorthand that my colleagues so frequently use. Epic showed how AI can translate obtuse medical shorthand–like “POD 1 sp CABG. HD stable. Amb w asst.”–into plain language: “Post op day 1 status post coronary bypass graft surgery. Hemodynamically stable. Patient is able to ambulate with assist.”

- For nurses, ambient documentation and AI-generated shift notes will be available, reducing manual entry and freeing up time for patient care.

And of course Epic isn’t the only EHR (honestly!). Its competitors aren’t standing still. Meditech’s COO Helen Waters gave a wide-ranging interview to HISTalk. I paid particular attention to her discussion of their work with Google in AI and I am quoting almost all of it:

This initial product was built off of the BERT language model. It wasn’t necessarily generative AI, but it was one of their first large language models. The feature in that was called Conditions Explorer, and that functionality was really a leap forward. It was intelligently organizing the patient information directly from within the chart, and as the physician was working in the chart workflow, offering both a longitudinal view of the patient’s health by specific conditions and categorizing that information in a manner that clinicians could quickly access relevant information to particular health issues, correlated information, making it more efficient in informed decision making. <snip>

Beyond that, with the Vertex AI platform and certainly multiple iterations of Gemini, we’ve walked forward to offer additional AI offerings in the category of gen AI, and that includes both a physician hospital course-of-stay narrative at the end of a patient’s time in the hospital to be discharged. We actually generate the course-of-stay, which has been usually beneficial for docs to not have to start to build that on their own.

We also do the same for nurses as they switch shifts. We give a nurse shift summary, which basically categorizes the relevant information from the previous shift and saves them quite a bit of time. We are using the Vertex AI platform to do that. And in addition to everyone else under the sun, we have obviously delivered and brought live ambient scribe capabilities with AI platforms from a multitude of vendors, which has been successful for the company as well.

The concept of Google and the partnership remains strong. The results are clear with the vision that we had for Expanse Navigator. The progress continues around the LLMs, and what we’re seeing is great promise for the future of these technologies helping with administrative burdens and tasks, but also continued informed capacities to have clinicians feel strong and confident in the decisions they’re making.

The voice capabilities in the concept of agentic AI will clearly go far beyond ambient scribing, which is both exciting and ironic when you think about how the industry started with a pen way back when, we took them to keyboards, and then we took them to mobile devices, where they could tap and swipe with tablets and phones. Now we’re right back to voice, which I think will be pleasing provided it works efficiently and effectively for clinicians.

So if you read–not even between the lines but just what they are saying–Epic, which dominates AMCs and big non-profit health systems, and Meditech, the EMR for most big for-profit systems like HCA, are both building AI into their platforms for almost all of the workflow that most clinicians and administrators use.

I raised this issue a number of different ways at a meeting hosted by Commure, the General Catalyst-backed provider-focused AI company. Commure has been through a number of iterations in its 8-year life, but it is now an AI platform on which it is building several products or capabilities. (For more, here’s my interview with CEO Tanay Tandon.) These include (so far!) administration, revenue cycle, inventory and staff tracking, ambient listening/scribing, clinical workflow, and clinical summarization. You can bet there’s more to come via development or acquisition. In addition, Commure is doing this not only with the deep-pocketed backing of General Catalyst but also with partial ownership from HCA–incidentally Meditech’s biggest client. That means HCA has to figure out what Commure is doing compared to Meditech.

Finally there’s also a ton of AI activity using the big LLMs internally within AMCs and in providers, plans and payers generally. Don’t forget that all these players have heavily customized many of the tools (like Epic) which external vendors have sold them. They are also making their AI vendors “forward deploy” engineers to customize their AI tools to the clients’ workflow. But they are also building stuff themselves. For instance Stanford just released a homegrown product that uses AI to communicate lab results to patients. Not bought from a vendor, but developed internally using Anthropic’s Claude LLM. There are dozens and dozens of these homegrown projects happening in every major health care enterprise. All those data scientists have to keep busy somehow!

So what does that say about the role of AI?

First it’s clear that the current platforms of record in health care–the EHRs–are viewing themselves as massive data stores and are expecting that the AI tools that they and their partners develop will take over much of the workflow currently done by their human users.

Second, the law of tech has usually been that water flows downhill. More and more companies and products end up becoming features on other products and platforms. You may recall that there used to be separate software for writing (WordPerfect), presentations (Persuasion) and spreadsheets (Lotus 1-2-3), and now there is MS Office and Google Suite. Last month a company called Brellium raised $16m from presumably very clever VCs to summarize clinical notes and analyze them for compliance. Now watch them prove me wrong, but doesn’t it seem that everyone and their dog has already built AI to summarize and analyze clinical notes? Can’t one more analysis for compliance be added on easily? It’s a pretty good bet that this functionality will be part of some bigger product very soon.

(By the way, one area that might be distinct is voice conversation, which right now does seem to have a separate set of skills and companies working in it because interpreting human speech and conversing with humans is tricky. Of course that might be a temporary “moat” and these companies or their products may end up back in the main LLM soon enough).

Meanwhile, Vince Kuraitis, Girish Muralidharan & the late Jody Ranck just wrote a 3-part series on how the EMR is in any case moving towards becoming a bigger unified digital health platform, which suggests that the clinical part of the EMR will be integrated with all the other process stuff going on in health systems. Think staffing, supplies, finance, marketing, etc. And of course there’s still the ongoing integration between EMRs and medical devices and sensors across the hospital and eventually the wider health ecosystem.

So this integration of data sets could quickly lead to an AI-dominated super system in which lots of decisions are made automatically (e.g. AI tracking care protocols, as Robbie Pearl suggested on THCB a while back), while some decisions are operationally made by humans (ordering labs or meds, or setting staffing schedules) and finally a few decisions are more strategic. The progress towards deep research and agentic AI being made by the big LLMs has caused many (possibly including Satya Nadella) to suggest that SaaS is dead. It’s not hard to imagine a new future where everything is scraped by the AI and agents run everything globally in a health system.

This leads to a real problem for every player in the health care ecosystem.

If you are buying an AI system, you don’t know if the application or solution you are buying is going to be cannibalized by your own EHR, or by something that is already being built inside your organization.

If you are selling an AI system, you don’t know if your product is a feature of someone else’s AI, or if the skill is in the prompts your customers want to develop rather than in your tool. And worse, there’s little penalty in your potential clients waiting to see if something better and cheaper comes along.

And this is happening in a world in which there are new and better LLM and other AI models every few months.

I think for now the issue is that, until we get a clearer understanding of how all this plays out, there will be lots of false starts, funding rounds that don’t go anywhere, and AI implementations that don’t achieve much. Reports like the one from Sofia Guerra and Steve Kraus at Bessemer, which lays out 59 “jobs to be done”, may help. I’m just concerned that no one will be too sure what the right tool for the job is.

Of course I await my robot overlords telling me the correct answer.

Matthew Holt is the Publisher of THCB